There are people (apparently including @Graptemys) who edit on a very large scale, “bump into” very large data like these, and find them to be a “beast” for their machine, causing difficulty. We can all agree that this happens. Where we don’t agree is whether simplification is a solution, or the best solution.

While it’s true for most human OSM editors (I keep saying “human” before “editor” to distinguish them from a “software” editor, like JOSM or iD), not everybody who edits OSM is a “local activity” (human) editor. SOMEbody had to enter these data, and others will likely need to maintain them as they “bump into” them (e.g. splitting a way to become part of another boundary).

I suppose what this comes down to is “when is big TOO big?” and “when is ‘rough’ (or ragged, or coarse…) TOO rough?” As I’ve said, OSM doesn’t have (strictly defined, strictly enforced) data quality standards. Though, this thread seems to offer significant voices asking the question, “Well, should we?”

I might imagine (as they already exist) good data practices like “no more than 1800 (1801? 1900? 2000?) nodes in a way,” as it may be that somebody a while ago found good numbers like that to use so as to minimize our software choking on data bigger than those (in “typical” hardware environments). But as to precision, or “hugging a coastline within so many centimeters” or “with no more than 0.5 meters (or 1, or 2.5 or 3.0…) of distance between nodes…” OSM has no such rules, at least none that are hard-and-fast or enforceable. But that also means somebody could clean them up (reduce nodes, as @Graptemys originally suggested back in the initial post), as long as “not too much precision is thrown away,” without really offending OSM.

But look: what happened is this thread, a solicitation to our community of “what should we do here?” I don’t think the suggestion that we simplify existing data is outrageous; in fact, it is quite reasonable in my opinion. What I have found more fascinating than the technical results (unfortunately so far, wholly undetermined) is the seeming difficulty the community has in concluding what we might do about such “beasts.” (The word “beast” has been used before in other Discourse threads about such data, as they exist in many places in our map.)
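To make “reduce nodes, as long as not too much precision is thrown away” a bit more concrete, here is a minimal sketch of one common way to do it, the Douglas-Peucker algorithm. I am not claiming this is what JOSM’s Simplify Way does internally. The function names and the `tolerance` parameter are my own, and the sketch assumes the nodes are already in planar coordinates (say, projected meters) rather than raw latitude/longitude. It also ignores something a real edit cannot: nodes shared with other ways or relations would have to be kept.

```python
import math


def distance_to_segment(p, a, b):
    """Distance from point p to segment a-b, in the same planar units as the inputs."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        # Degenerate segment: a and b are the same point.
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(points, tolerance):
    """Douglas-Peucker: drop nodes that lie within `tolerance` of the simplified line.

    `points` is a list of (x, y) tuples; the first and last are always kept.
    """
    if len(points) < 3:
        return list(points)
    keep = [False] * len(points)
    keep[0] = keep[-1] = True
    stack = [(0, len(points) - 1)]  # explicit stack, so huge ways don't hit recursion limits
    while stack:
        first, last = stack.pop()
        worst, index = 0.0, None
        for i in range(first + 1, last):
            d = distance_to_segment(points[i], points[first], points[last])
            if d > worst:
                worst, index = d, i
        if index is not None and worst > tolerance:
            # The farthest node is too far off the chord: keep it and check both halves.
            keep[index] = True
            stack.append((first, index))
            stack.append((index, last))
    return [p for p, k in zip(points, keep) if k]
```

Called as `simplify(nodes, 0.5)`, no dropped node ends up more than 0.5 units from the simplified line, so the “when is rough TOO rough?” question becomes the choice of that one number.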

So: it seems understood that “if you are going to ride the ride, you really should be ‘at least this’ tall” (lots of RAM helps, even knowing not everybody has gobs of RAM). I think this is what @dieterdreist means by “dedicated tools.” But what we don’t seem to have addressed is “what if I find one of these and it is seriously over-noded in my opinion: can I ‘clean it up’ (using something like JOSM’s Simplify Way)? Must I consult with the community first?” I realize consulting is always a good idea, but look where it got @Graptemys: a very long thread, and not a lot of consensus.

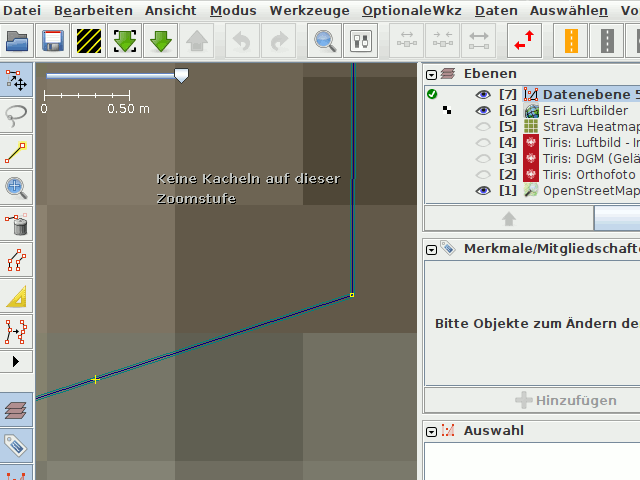

(Screenshot from a place where the outline of that lake in Canada does not match any aerial imagery available to me. There are water waves close by that make even the current level of detail unrealistic.)
